This book can best be described as an examination of formal epistemological methods driven by a serious confrontation with Cartesian skepticism. Shogenji argues that contemporary epistemologies fail to vindicate belief in the natural world in the face of such skepticism. This motivates him to develop a new formal approach to epistemology, which he argues has the resources for rebutting the skeptic. He describes his project as "meliorative epistemology," engineering our concepts and methods in order to improve our epistemic lot, in contrast with "epistemography," accommodating and describing pre-theoretic epistemic judgments. In this work, Shogenji ultimately offers a "defense of belief in the natural world" grounded in an originally engineered formal epistemology.

This book discusses an assortment of topics the reader might not be expecting to encounter, but each of which Shogenji shows to be connected in interesting ways to the challenge of skepticism. There is a chapter "debunking the myth" of epistemic circularity, which Shogenji includes for the sake of pinpointing the exact challenge of Cartesian skepticism (demonstrating that such skepticism is not driven by any circularity). Other topics explored in more or less detail include the correspondence theory of truth, representational accounts of perception, epistemic foundationalism and internalism, epistemic closure, and the old evidence problem. There are also some unfortunate absences. I was surprised by the lack of discussion of interpretations of probability and of the extent to which Shogenji's case is sensitive to the choice of interpretation. At various points, the book draws upon distinct interpretations of probability, leaving readers to distinguish the different notions for themselves. On a more practical note, the book lacks a short introduction to probability theory, which would make it more accessible and illuminating to a wider audience. These minor concerns aside, the book is a formal epistemological delight. Shogenji's work is characteristically fascinating, careful, and clever.

Shogenji argues that the central issue at work in Cartesian skepticism is empirical equivalence. We agents have an abundance of evidence that probabilistically supports (i.e., raises the probability of) the "natural world hypothesis" (hNW). But the skeptic's claim is not that there is no such confirming evidence, but that there is no case to be made from our evidence for belief in the natural world in the presence of empirically equivalent, skeptical alternatives. Skeptical hypotheses are constructed in such a way that agents should expect to perceive any possible piece of evidence to the exact same degree whether the natural world hypothesis or this skeptical alternative is true. The salient explication of empirical equivalence at work here is equality of likelihood functions between hNW and skeptical alternatives.

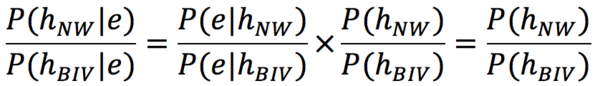

Shogenji considers and dismisses some well-known Bayesian responses to Cartesian skepticism. The simplest reminds the skeptic that the comparative probabilities of hypotheses in light of evidence are determined not only by the hypotheses' likelihood functions, but also by their comparative prior probabilities. Consider a brains-in-vats version of the Cartesian skeptical hypothesis, hBIV. Comparing P(hNW|e) to P(hBIV|e) in terms of their ratio, under the assumption of empirical equivalence, we have:
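Spelled out via Bayes's theorem, the comparison runs as follows. This is a reconstruction from the surrounding text, taking empirical equivalence to mean P(e|hNW) = P(e|hBIV), so that the likelihoods cancel and only the priors remain:

```latex
\[
\frac{P(h_{NW}\mid e)}{P(h_{BIV}\mid e)}
  = \frac{P(h_{NW})\,P(e\mid h_{NW})}{P(h_{BIV})\,P(e\mid h_{BIV})}
  = \frac{P(h_{NW})}{P(h_{BIV})}
\]
```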

At this point, the Bayesian must make some case for why this ratio is top-heavy. Such a case might, for example, appeal to a difference in overall theoretical virtue between the two hypotheses (Vogel 2014, Huemer 2016).

No matter how convincing the case, Shogenji argues that this response misses the mark. The skeptic is not trying to argue in favor of hBIV any more than in favor of hNW; rather, the skeptic is arguing that there is no good reason to assent to either. The appropriate comparison then is between hNW and the non-committal hNW ∨ hBIV. So long as hBIV has not been decisively refuted, the disjunctive hypothesis always comes out probabilistically on top in this comparison.

In response, the Bayesian might appeal to a Lockean articulation of assent. Even if hNW ∨ hBIV is always more probable than hNW, both might be sufficiently probable for assent (above some threshold t): P(hNW ∨ hBIV|e) > P(hNW|e) > t. Furthermore, given t > 0.5, this would imply that the mutually exclusive skeptical alternative is not worthy of assent: P(hBIV|e) < t. But Shogenji provides a strong, novel criticism of this type of move. The Lockean format absurdly allows for the "backdoor endorsement" of any otherwise unacceptable hypothesis. This occurs when one deductively infers such a hypothesis from a set of strategically chosen statements, each of which is sufficiently probable for assent.
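A toy illustration may help to make the backdoor worry concrete. The sketch below is not Shogenji's own example: the four worlds, their probabilities, the auxiliary statement x, and the threshold t = 0.6 are all hypothetical choices. It shows two statements that each clear the Lockean threshold and jointly entail a hypothesis h that falls well below it.

```python
from fractions import Fraction

# Hypothetical toy distribution over worlds (h true?, x true?); exact arithmetic.
# h is the otherwise unacceptable hypothesis, x an arbitrary auxiliary statement.
WORLDS = {
    (True, True): Fraction(1, 8),
    (True, False): Fraction(1, 8),
    (False, True): Fraction(3, 8),
    (False, False): Fraction(3, 8),
}
T = Fraction(3, 5)  # assumed Lockean threshold, above 0.5


def prob(statement):
    """Probability of a statement, given as a predicate on worlds."""
    return sum(p for world, p in WORLDS.items() if statement(*world))


def h(h_true, x_true):
    return h_true


def h_or_x(h_true, x_true):
    return h_true or x_true


def h_or_not_x(h_true, x_true):
    return h_true or not x_true


def lockean_accepts(statement):
    """Lockean assent: probability strictly above the threshold."""
    return prob(statement) > T


# The two premises jointly entail h: h holds in every world where both hold.
premises_entail_h = all(
    h(*w) for w in WORLDS if h_or_x(*w) and h_or_not_x(*w)
)

print(prob(h_or_x), prob(h_or_not_x), prob(h), premises_entail_h)
```

Each premise has probability 5/8, yet h itself has probability 1/4. Assent to both premises plus deductive closure would thus endorse h through the back door.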

Shogenji takes this problem to be symptomatic of a more fundamental flaw in the "standard Bayesian format." Overall epistemic worthiness is determined at least by the dual ends of believing truth and avoiding falsehood. By attending only to a hypothesis's posterior probability, the Bayesian format neglects the former component. A disjunction is always deemed worthier of acceptance than one of its disjuncts because it is always a safer bet for avoiding falsehood. Shogenji, by contrast, maintains that an appropriate additional focus on informativeness in believing truths can lead to situations where a disjunction is less epistemically worthy of acceptance than commitment to one of the disjuncts alone.

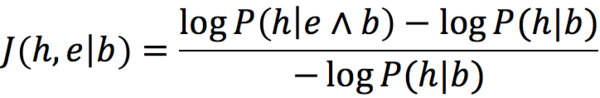

Explicating safety as posterior probability and informativeness as a decreasing function of a hypothesis's prior probability, the search for a measure of epistemic worthiness that balances these considerations mines the vast set of probabilistic relevance measures. Shogenji helpfully shows that a formal desideratum effectively banning "backdoor endorsements" of the sort described above suffices to distinguish a unique (up to ordinal equivalence) measure of epistemic worthiness:
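In LaTeX, and on the assumption that J is the normalized log-ratio measure of justification that Shogenji has defended in earlier work, the measure would read as follows. The notation J(h,e|b) follows this review; the exact functional form is an assumption here, not something the surrounding text fixes:

```latex
\[
J(h, e \mid b) \;=\; \frac{\log P(h \mid e \wedge b) \;-\; \log P(h \mid b)}{-\log P(h \mid b)}
\]
```

On this form, J equals 1 when e makes h certain given b, equals 0 when e is probabilistically irrelevant to h, and is negative when e lowers the probability of h. A lower prior (greater informativeness) raises J for a fixed posterior, which is how the measure rewards content as well as safety.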

He then proposes his own threshold-based, "dual-component format of epistemic evaluation" (pp. 89-90), which mandates endorsement of a statement h if and only if J(h,e|b) ≥ t.

At this point, Shogenji has applied his meliorative approach and engineered new epistemic resources for avoiding falsehood and believing truth. When applied to the challenge of Cartesian skepticism, do these resources give us a defense of belief in the natural world? Chapter 5 investigates this question and ultimately answers 'no'! The argument primarily turns on Shogenji's formal notion of "supersedure," defined as the satisfaction of the following three conditions:

1. Hypothesis h is sufficiently epistemically worthy to be accepted; J(h,e|b) ≥ t.

2. Hypothesis h* is yet more epistemically worthy to be accepted; J(h*,e|b) > J(h,e|b).

3. In light of h*, h is no longer sufficiently worthy of acceptance; J(h,e|b ∧ h*) < t.

In sum, we say that (the case for) h is superseded by h* when h* undercuts h's epistemic worthiness to such an extent that h is no longer acceptable in light of h*.[1]
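The three conditions can be checked mechanically on a toy case. The sketch below rests on two loudly flagged assumptions: that J is the normalized log-ratio measure, (log P(h|e∧b) − log P(h|b)) / (−log P(h|b)), and that the priors, likelihoods, and threshold t = 0.9 (all hypothetical numbers, not the book's) are a fair stand-in for the skeptical scenario. With hNW and hBIV assigned equal likelihoods, it tests whether hNW ∨ hBIV supersedes hNW.

```python
import math

# Hypothetical toy numbers, not taken from the book.
PRIOR = {"NW": 0.01, "BIV": 0.001, "OTHER": 0.989}
# P(e | atom): NW and BIV are empirically equivalent, so their likelihoods match.
LIKELIHOOD = {"NW": 1.0, "BIV": 1.0, "OTHER": 0.0001}
ALL = frozenset(PRIOR)
NW = frozenset({"NW"})
NW_OR_BIV = frozenset({"NW", "BIV"})


def prob(h, background, with_evidence):
    """P(h | background), additionally conditioned on e if with_evidence."""
    def weight(atom):
        return PRIOR[atom] * (LIKELIHOOD[atom] if with_evidence else 1.0)
    total = sum(weight(a) for a in background)
    return sum(weight(a) for a in h & background) / total


def J(h, background=ALL):
    """Assumed form of J: normalized log-ratio of posterior to prior.

    Undefined (division by zero) when the background already entails h.
    """
    prior = prob(h, background, with_evidence=False)
    posterior = prob(h, background, with_evidence=True)
    return (math.log(posterior) - math.log(prior)) / -math.log(prior)


def supersedes(h_star, h, t):
    """Check the three supersedure conditions for h* against h."""
    return (
        J(h) >= t                      # 1. h is acceptable on its own
        and J(h_star) > J(h)           # 2. h* is worthier still
        and J(h, ALL & h_star) < t     # 3. given h*, h is no longer acceptable
    )


print(J(NW), J(NW_OR_BIV), J(NW, NW_OR_BIV))
print(supersedes(NW_OR_BIV, NW, t=0.9))
```

Condition 3 holds here because, once the disjunction is taken as background, equal likelihoods leave the probability of hNW exactly where it started, so the evidence contributes nothing further.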

Comparing the natural world hypothesis hNW again with hNW ∨ hBIV, Shogenji verifies that hNW is superseded by hNW ∨ hBIV. He concludes that

although we have a strong case for accepting a particular hypothesis about the natural world in the presence of relevant evidence, it is superseded by the disjunction of the hypothesis and its counterpart proposed by the skeptic. We should therefore remain non-committal between any particular hypothesis about the natural world and its counterpart proposed by the skeptic (pp. 120-21).[2]

This is concerning news. But it gets worse. Shogenji points out that the same case can be made to promote a form of inductive skepticism. A non-committal stance toward mutually exclusive hypotheses that bestow equal likelihood on our actual evidence supersedes the case for accepting any of these hypotheses. This approach thus advises us to abandon the unachievable goal of defending particular hypotheses out of a collection of mutually exclusive rivals that render actual evidence equally likely (p. 125). The upshot seems devastating for induction. No matter how many times we've eaten bread and found it nourishing, we cannot rationally infer that bread nourishes, or that the next bit of bread will nourish. This would ignore the fact that these hypotheses are superseded by disjunctive hypotheses agnostic between bread being generally nourishing and bread having been nourishing up until now, while being toxic from this time forward.

Shogenji bites this bullet, concluding that there is no defense of belief in the natural world or induction grounded in the dual-component format of epistemic evaluation! This is not to say that there is no rational basis for accepting the natural world. But if any is to be found, we will have to supplement our epistemological methods, engineering them appropriately.

The new epistemic goal that Shogenji puts in our sights is to believe truth and avoid falsehood by reasoning from probabilities estimated to diverge the least from the true distribution. This goal is a strict strengthening of the previous goal, and so more difficult to achieve. When we use values of J as a criterion for rational acceptance, we work from a probability distribution. But in the dual-component format, there are no restrictions on that underlying distribution. Shogenji suggests we attend first to selecting the best probabilistic model on the basis of a relative-divergence format. The chosen model is combined with the available data in order to generate a probabilistic hypothesis and corresponding distribution. We then apply the dual-component format of epistemic evaluation using the generated distribution.

One might wonder what good this could be for finding a way around the argument for skepticism. The fact of supersedure will still hold so long as we can compare hNW ∨ hBIV to hNW. But it is not the ensuing probabilistic reasoning that allows one to avoid the skeptical argument, but rather the means of selecting the distribution. There are various candidate relative-divergence formats to consider (Shogenji specifically discusses cross-validation techniques and AIC). The crucial property they have in common is that they balance a model's fit with data against its complexity (commonly measured as the number of adjustable parameters in the model). Penalizations for complexity in model selection ultimately provide the case against skepticism.
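For reference, AIC in its standard form scores a model as follows, where lower scores are better. The symbols k (the number of adjustable parameters) and \hat{L} (the model's maximized likelihood on the data) are notation introduced here rather than the book's:

```latex
\[
\mathrm{AIC} \;=\; 2k \;-\; 2\ln \hat{L}
\]
```

The second term rewards fit with the data; the first term, 2k, is the complexity penalty that does the anti-skeptical work in Shogenji's argument.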

Consider inductive skepticism first. Shogenji has us imagine an "unconventional slot machine" that, when fed a dollar, displays either blue or green each time one presses a button. After inserting a dollar and pressing the button ten times, we record the following data sequence DL: GGGBGGGGGG. A simple Bernoulli model, BNL, can be used to generate hypothesis h from this data -- where C( ) is the (hypothesized) objective chance function:

BNL: C(G)=p

h: C(G)=0.9
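The step from BNL and DL to h can be sketched in a few lines, assuming the model's parameter is fit by maximum likelihood, which for a Bernoulli model is just the observed relative frequency. The function name `fit_bernoulli` is hypothetical.

```python
from fractions import Fraction

DL = "GGGBGGGGGG"  # the recorded sequence of ten outcomes


def fit_bernoulli(outcomes):
    """Maximum-likelihood estimate of C(G): the relative frequency of 'G'."""
    return Fraction(outcomes.count("G"), len(outcomes))


p_hat = fit_bernoulli(DL)
print(p_hat, float(p_hat))
```

Nine of the ten outcomes are G, so the fitted value of p is 9/10, matching h.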

The skeptic might have us consider instead an alternative hypothesis identical up to the tenth button press, but with chances then changing dramatically -- where C(Gx) is the probability that the x'th outcome is a G:

h*: C(Gx)=0.9 for x≤10

C(Gx)=0 for x>10

The disjunctive hypothesis h ∨ h* supersedes the case for h, taking away our ability to generalize or project from the evidence collected.

But how does the skeptic fare relative to the strengthened epistemic goal? Shogenji argues that h* cannot even be considered in our probabilistic reasoning, since it is not generated from the data DL by any probabilistic model. Most obviously, the skeptic might hope to generate h* from the following model:

BNL*: C(Gx)=p for x≤k

C(Gx)=q for x>k

However, BNL* does not generate h* from DL; and it seems that, since we only have ten outcomes recorded in DL, this sequence will not allow any inductively skeptical hypothesis to be generated. The ground for eliminating h* from consideration is thus "the absence of a model that generates h* from the actual body of evidence DL" (p. 161). The skeptical alternative ends up being disfavored, not because it fares poorly in probabilistic reasoning, but because it involves a hypothesis dismissed prior to such reasoning on account of its needless complexity.
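The point can be illustrated by fitting both models to DL and scoring them with AIC. This is a sketch under stated assumptions, not Shogenji's own computation: parameters are fit by maximum likelihood, BNL* is counted as having three adjustable parameters (p, q, and the change point k), and both segments of BNL* are required to be non-empty.

```python
import math

DL = "GGGBGGGGGG"


def max_log_likelihood(outcomes):
    """Maximized Bernoulli log-likelihood of a string of 'G'/'B' outcomes."""
    n = len(outcomes)
    greens = outcomes.count("G")
    ll = 0.0
    for count in (greens, n - greens):
        if count:  # skip empty categories, treating 0 * log(0) as 0
            ll += count * math.log(count / n)
    return ll


def aic(num_params, log_likelihood):
    """Standard AIC: 2k - 2 ln(L-hat); lower is better."""
    return 2 * num_params - 2 * log_likelihood


def split_log_likelihood(k):
    """Log-likelihood of BNL* with a change point after press k."""
    return max_log_likelihood(DL[:k]) + max_log_likelihood(DL[k:])


# BNL: one adjustable parameter p.
aic_bnl = aic(1, max_log_likelihood(DL))

# BNL*: fit the change point k by trying every split of the ten outcomes.
best_k = max(range(1, len(DL)), key=split_log_likelihood)
aic_bnl_star = aic(3, split_log_likelihood(best_k))

print(best_k, round(aic_bnl, 3), round(aic_bnl_star, 3))
```

On these assumptions the best-fitting instance of BNL* places its change point after the fourth press (where the lone B occurs), not after the tenth, so it does not yield h*. Its better fit also fails to offset the penalty for two extra parameters, so BNL receives the lower AIC score.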

Cartesian skepticism is ruled out for similar reasons. Any Cartesian skeptical scenario involves an alternative account of how the natural world is deceptively presented to agents. The account of this process is added, effectively as an additional adjustable parameter, to a description of the content of the natural world. By contrast, hNW contains only the description of the natural world itself, which "provides its own account" of how our experience is produced. The skeptical model provides no better fit with our evidence but it is strictly more complex than hNW. Like the inductive skeptical model above, it is needlessly complex, and so it is barred from consideration.

In the remainder of this review, I want to try a response on behalf of the skeptic. Consider again Shogenji's slot machine and his claim that no model generates h* from the actual body of evidence DL. Without further qualification, this claim is false. Instead of BNL*, consider the following model:

BNL**: C(Gx)=p for x≤10

C(Gx)=0 for x>10

BNL** is indeed a model by Shogenji's lights: "a template that specifies the shape of a theory" (p. 131). Furthermore, it is a model the skeptic would propose, having us entertain the possibility that this machine produces the result G with some objective chance, but only up to the tenth pull, with G being impossible thereafter. Shogenji's method for hypothesis selection would have us select a value p=0.9 here from DL, and so BNL** does generate h* from DL. Moreover, BNL**, like BNL, contains only one adjustable parameter, making these two models equal in complexity. It would seem that BNL** cannot be ruled out in the model selection stage then, which would mean that we again should consider the skeptical hypothesis h* in our dual-component reasoning. And so, we are left again with the worry that h∨h* supersedes the case for h.
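The claim that BNL and BNL** are on a par with respect to DL can be checked directly. The sketch below assumes maximum-likelihood fitting; the function names are hypothetical. It fits the single parameter p for both models, confirms that the resulting hypotheses h and h* assign DL the same probability, and shows that they diverge only on the eleventh press.

```python
from fractions import Fraction

DL = "GGGBGGGGGG"


def fit_p(outcomes):
    """Maximum-likelihood value of p: the relative frequency of 'G'."""
    return Fraction(outcomes.count("G"), len(outcomes))


def chance_h(x, p):
    """h (from BNL): the chance of G on press x is p throughout."""
    return p


def chance_h_star(x, p):
    """h* (from BNL**): chance p up to press 10, chance 0 afterwards."""
    return p if x <= 10 else Fraction(0)


def likelihood(chance, outcomes, p):
    """Probability the hypothesis assigns to the recorded sequence."""
    result = Fraction(1)
    for x, outcome in enumerate(outcomes, start=1):
        c = chance(x, p)
        result *= c if outcome == "G" else 1 - c
    return result


p_hat = fit_p(DL)  # both models have the one adjustable parameter p
same_fit = likelihood(chance_h, DL, p_hat) == likelihood(chance_h_star, DL, p_hat)

print(p_hat, same_fit, chance_h(11, p_hat), chance_h_star(11, p_hat))
```

With equal fit and one adjustable parameter apiece, any criterion that looks only at fit and parameter count cannot separate the two models.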

Shogenji considers this response and introduces a principle that directly bars consideration of BNL** and any other models that include non-adjustable parameters the values of which are "determined by the present research" (pp. 163-64). But is it really appropriate to hold this principle up against the skeptic? It seems to me that it is not. BNL** exists regardless of how any particular agent discovers it. It is not somehow implicit in BNL** that its non-adjustable parameters were set post-hoc. The question then is how this model fares when held up against BNL, regardless of how an agent came to discover and consider it.

Indeed, some of Shogenji's own thoughts on model selection can, it seems, be used against him here. Whether or not BNL** was brought to mind by a post-hoc fixing of parameters in light of DL, such details belong to what Shogenji calls the "context of discovery." He maintains that this is an entirely separate affair from what takes place in the "context of justification." As he writes, "the decision on which models to evaluate is not part of the evaluation. . . . If a model is on the list of candidates to evaluate, then only the data can justify its rejection" (p. 154). Effectively, by banning models from evaluation on account of how they were discovered, Shogenji is mixing the contexts of discovery and justification. His "justifications" of belief in the natural world and induction ultimately refer to features of how the relevant skeptical models were discovered. But then, by Shogenji's own lights, this is not a justification for dismissing skepticism at all, so much as a simple refusal to allow the skeptic's models to be evaluated in the context of justification.[3]

The same response applies on behalf of the Cartesian skeptic. Shogenji faults the skeptical model for having to include some account or another of the process that deceptively presents experience to agents. But so long as we consider a skeptical model that fixes this process (rather than treating it as an additional adjustable parameter), we will have to consider the skeptical hypothesis generated from it by our evidence. It seems that if we are careful to follow Shogenji's advice and not mix contexts of discovery and justification, we will not be able to build his case against skepticism, since we will not be able to rule skeptical hypotheses out at the relative-divergence, model selection stage.

If this is correct, then Shogenji's book may actually guide skeptics to a yet more robust position. To defend skepticism against even Shogenji's newly engineered epistemic resources, the skeptic should be sure to present committed skeptical models, ones that aren't more complex by having more adjustable parameters than their non-skeptical counterparts. The Cartesian skeptic should tell a thoroughly specified production story, one with no adjustable parameters, and thus no added complexity.

REFERENCES

Huemer, M., 2016. "Serious Theories and Skeptical Theories: Why You are Probably Not a Brain in a Vat" Philosophical Studies, 173(4): 1031-1052.

Schupbach, J. N. and D. H. Glass, 2017. "Hypothesis Competition Beyond Mutual Exclusivity" Philosophy of Science, 84(5): 810-824.

Vogel, J., 2014. "The Refutation of Skepticism" in Steup and Turri (eds.), Contemporary Debates in Epistemology, 108-120. John Wiley and Sons, 2nd edition.

[1] Shogenji's notion of supersedure is a special case of what Schupbach and Glass (2017) have described as the "indirect path" to competition, occurring "to the extent that adopting either hypothesis undermines the support that the relevant body of evidence provides for the other" (p. 812).

[2] Shogenji goes on to apply the notion of supersedure again in proving an important, more general result: that "we cannot select and defend any hypothesis from a set of empirically equivalent, rival [read mutually exclusive] hypotheses" (p. 121).

[3] Shogenji insists that models like BNL** are banned, not because of how they were discovered, but rather to preserve "the distinction between models and theories that is crucial to guarding against overfitting" (p. 163). Without a principle banning post-hoc fixing of parameters, one can fix all parameters post-hoc, effectively converting any model into a theory with no penalties for complexity (i.e., with no adjustable parameters). But this again seems to me substantively to involve details from the context of discovery. Leaving these out, the fact remains that BNL** is a model that we might be asked to consider—regardless of whether it was developed post-hoc or not. Also, notably Shogenji does nothing to show that any principle that would successfully preclude the collapsing of models into theories would have to ban relevant models like BNL** from consideration (even if his principle would).