On page 1, Gábor Hofer-Szabó, Miklós Rédei, and László E. Szabó describe the Principle of Common Cause, and then point to a number of alleged counterexamples that exist in the literature. On page 2, they then state the main aim of the book:

The main message of this book is that assessing the status of the Principle of the Common Cause is a very subtle matter requiring a careful investigation of both the principle itself and the evidence for/against it provided by our best scientific theories.

Specifically, the arguments from the above-mentioned counterexamples to the failure of the Common Cause Principle are too quick. What makes this perilous speed possible is in part the ambiguity and vagueness of the counterexamples in question: almost invariably, the probabilistic framework in which the counterexamples would be well-defined is not specified explicitly; this has the consequence that the problem of validity and falsifiability of the Common Cause Principle does not get a conceptually and technically sharp formulation.

This is an excellent project. But does this book provide a solution to this problem? The authors' attempted solution is founded on a technically sharp definition of 'common cause' precisely stated in terms of four probabilistic conditions, which I will explain later. This definition introduces the precision that enables the authors to state and prove a series of mathematical theorems, which takes up most of the book. These theorems are technically sharp, but are they accurate? Do they solve the philosophical problem summarized in the two paragraphs quoted above? Do these theorems help to determine the status of the Principle of the Common Cause? The theorems are theorems of probability theory, yet the authors describe the results in terms of the notion of 'common cause'. This is legitimate only if the notion 'common cause' can be reduced to a set of probabilistic conditions in the way the authors claim. The aim of this review is to explain why their definition is not correct.

The definition is not just a little bit incorrect! It cannot be corrected by some simple modification of the definition, or adjustment of the language. Almost the whole book is based on this definition. For this reason, the authors' claims about the philosophical import of their mathematical theorems are mostly incorrect, and it is not easy to say what the correct philosophical import of the theorems really is. For those interested in mathematics for its own sake, there are some interesting theorems in probability theory. For those interested in the philosophical problems, extracting the lessons from this book will be a non-trivial task.

I will begin by explaining the Principle of Common Cause in terms of a simple example (section 1) and then (in section 2) document how the authors' mistaken definition of 'common cause' traces back to Reichenbach (1956). Later, I will examine the definition of 'common cause' and explain why it is wrong (sections 3 and 4). Section 5 sums up by tracing the mistake back to the distinction between the Causal Markov Condition and the Causal Faithfulness Condition, which is widely discussed in the contemporary causation literature.

1. The Geyser Example

"Suppose two geysers which are not far apart spout irregularly, but throw up their columns of water always at the same time. The existence of a subterranean connection of the two geysers with a common reservoir of hot water is then practically certain." (Reichenbach (1956), p. 158.) The idea is this. If the spouting of one geyser is probabilistically dependent on the other, and neither causes the other, then there must exist a common cause, such that . . . (some conditions). The conditions here are generally known as screening off conditions.

As we shall see, these conditions are used by the authors to define the notion of 'common cause'. For now, we shall ignore these conditions. Let us adopt the following abbreviations.

At: Geyser a spouts at time t. Āt: Geyser a does not spout at time t.

Bt: Geyser b spouts at time t. B̄t: Geyser b does not spout at time t.

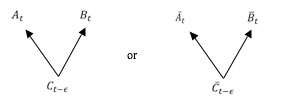

Usually, at any particular time t, either both geysers spout, or neither spouts. The repetition of this fact over many instances supports the conclusion that at each time t the events are probabilistically correlated. We express this by the formal statement that, for all times t, P(At,Bt)>P(At)P(Bt). The common cause explanation is that, at each of these times, a short time before they both spout, there was a build-up of high pressure in the underground reservoir to which they are both connected. This is illustrated by the accompanying causal diagram.

2. Reichenbach's Definition of Conjunctive Fork

The geyser example illustrates a general principle that we call the Principle of Common Cause (PCC). Here's how Reichenbach (1956) motivates this idea. First, he refers to the causal diagrams like the ones above as conjunctive forks. Since we are considering some fixed time, t, I have dropped the subscripts. The probabilities in the following quote have been changed into contemporary notation. He says (Reichenbach (1956), p. 159):

In order to explain the coincidence of A and B, which has a probability exceeding that of a chance coincidence [P(A,B)>P(A)P(B)], we assume that there exists a common cause C. . . . We will now introduce the assumption that the fork ABC satisfies the following relations:

P(A,B|C)=P(A|C)P(B|C) (5)

P(A,B|C̄)=P(A|C̄)P(B|C̄) (6)

P(A|C)>P(A|C̄) (7)

P(B|C)>P(B|C̄) (8)

It will be shown that the relation P(A,B)>P(A)P(B) is derivable from these relations. For this reason, we shall say that the relations (5)–(8) define a conjunctive fork, that is, a fork which makes the conjunction of the two events A and B more frequent than it would be for independent events.
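The derivation that Reichenbach promises here is short, and it is worth having in view because it is used again in section 4. The following is only a sketch; the abbreviations p = P(C), a = P(A|C), a' = P(A|C̄), b = P(B|C), and b' = P(B|C̄) are introduced here for convenience and are not Reichenbach's own notation. It assumes 0 < p < 1 so that the conditional probabilities are defined.

```latex
\begin{align*}
P(A,B) &= p\,ab + (1-p)\,a'b'
  && \text{by (5), (6) and total probability} \\
P(A)\,P(B) &= \bigl(pa + (1-p)a'\bigr)\bigl(pb + (1-p)b'\bigr)
  && \text{by total probability} \\
P(A,B) - P(A)\,P(B) &= p(1-p)\,(a - a')(b - b')
  && \text{expanding and collecting terms} \\
&> 0
  && \text{by (7), (8) and } 0 < p < 1
\end{align*}
```

So the conjunction of (5)–(8) entails a positive correlation between A and B, but nothing in the derivation appeals to any causal fact about C.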

The two conditional independence conditions, (5) and (6), are often described in the philosophical literature as "C screens off A from B" and "C̄ screens off A from B." The reason why a common cause "screens off" its effects is explained in terms of the following kind of example (van Fraassen (1982a), p. 27).

Suppose there is a correlation between two (sorts of) events, such as lung cancer and heavy smoking. That is, a correlation in the simultaneous presence of two factors: having lung cancer now and being a heavy smoker now. An explanation that has at least the form to satisfy us traces both back to a common cause (in this case, a history of smoking which both produced the smoking habit and irritated the lungs). Characteristic of such a common cause is that, relative to it, the two events are independent. Thus present smoking is a good indication of lung cancer in the population as a whole; but it carries no information of that sort for people whose past smoking history is already known.

Earlier, I quoted Reichenbach in full because, in this passage, Reichenbach has introduced a confusion that has already affected the philosophical literature for many years, and will affect it for many years to come. I am concerned here only with one effect it has had, namely the effect it has had on the book under review.

3. Why Is the Definition Wrong?

Here's what's wrong. Reichenbach begins with the notion of a conjunctive fork defined by the causal diagram above. The notion of a conjunctive fork is a purely causal notion. But then he uses the probability relations in (5)–(8) to define a conjunctive fork. If he means by this that (5)–(8) are sufficient conditions for any causal relations amongst ABC to be a conjunctive fork, then this is a mistake. Some of the reasons were already known to Reichenbach; in fact, they were published in the same book. It must be remembered that Reichenbach's book was published posthumously, so if he had lived, he may well have revised this section in light of what he learnt in writing other parts of the book.

Unfortunately, the authors of The Principle of the Common Cause accept this definition at face value. Here is my paraphrase (Hofer-Szabó et al. (2013), p. 13):

Definition 2.4: C is a common cause of the correlation (P(A,B)>P(A)P(B)) if conditions (5)–(8) hold.

They immediately point out that "The above definition was first given by Reichenbach (1956)" (p. 13). As we have seen, the mistake does trace back to Reichenbach (1956), but that does not make it less of a mistake.

We may begin to see why the definition is mistaken if we note that conditions (7) and (8) express probabilistic correlations. Probabilistic correlations are completely symmetrical, so in particular, (7) could be rewritten equivalently as (7') P(C|A)>P(C|Ā). Then we see that conditions (5)–(8) are also the conditions that apply to the causal diagram A → C → B. The conditional independence conditions (5) and (6) say, in this case, that A only acts on B via C; that is, there is no causal path from A to B except the one that passes through C. In terms of conditional independence, given that we already know the state of the intermediate cause C, knowing A does not provide any additional information about B. Thus, the conjunction of conditions (5)–(8) is not a sufficient condition for a conjunctive fork. In terms of what's been shown so far, at best, the conjunction of (5)–(8) is a sufficient condition for either a conjunctive fork A ← C → B or a linear causal structure A → C → B.
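To make the point concrete, here is a minimal sketch that builds a probability distribution from a linear structure A → C → B and checks conditions (5)–(8), together with (7'), by exact arithmetic. The particular numbers (P(A)=1/2 and conditional probabilities of 3/4 and 1/4) are hypothetical values chosen only for illustration; any chain in which each link is a positive dependence should behave the same way.

```python
from fractions import Fraction
from itertools import product

# Hypothetical parameters for the chain A -> C -> B (illustrative only).
P_A = Fraction(1, 2)
P_C_GIVEN = {True: Fraction(3, 4), False: Fraction(1, 4)}  # P(C|A), P(C|not A)
P_B_GIVEN = {True: Fraction(3, 4), False: Fraction(1, 4)}  # P(B|C), P(B|not C)


def bern(p, value):
    """Probability that a binary variable with P(True) = p takes `value`."""
    return p if value else 1 - p


# Joint distribution over worlds (a, c, b), factorised along the chain.
joint = {
    (a, c, b): bern(P_A, a) * bern(P_C_GIVEN[a], c) * bern(P_B_GIVEN[c], b)
    for a, c, b in product([True, False], repeat=3)
}


def prob(event, given=lambda w: True):
    """Conditional probability P(event | given) under the joint distribution."""
    den = sum(p for w, p in joint.items() if given(w))
    num = sum(p for w, p in joint.items() if given(w) and event(w))
    return num / den


A = lambda w: w[0]
C = lambda w: w[1]
B = lambda w: w[2]
not_A = lambda w: not w[0]
not_C = lambda w: not w[1]
A_and_B = lambda w: w[0] and w[2]

conditions = {
    "(5)": prob(A_and_B, C) == prob(A, C) * prob(B, C),
    "(6)": prob(A_and_B, not_C) == prob(A, not_C) * prob(B, not_C),
    "(7)": prob(A, C) > prob(A, not_C),
    "(7')": prob(C, A) > prob(C, not_A),
    "(8)": prob(B, C) > prob(B, not_C),
}
print(conditions)
```

Every entry comes out true, even though the distribution was generated by a chain in which C is an effect of A rather than a cause of it.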

Bas van Fraassen and Frank Arntzenius have also adopted something close to Definition 2.4 (van Fraassen (1982b), Arntzenius (1993)), with one important difference. They added the condition that the event C occur at an earlier time than either A or B. If one believes that causes always precede their effects, then this added condition rules out the linear causal structure A → C → B.

Unfortunately, this added condition does not solve the problem. The following example shows that conditions (5)–(8) are consistent with there being no causal relation from C to A at all! Consider the case in which C causes B. For the sake of mathematical simplicity, suppose that C is a necessary and sufficient cause for B, which means that P(B|C)=1 and P(B|C̄)=0. Further suppose that event A is totally causally unconnected with B and C, or any event defined in terms of them. This implies that A is probabilistically independent of any event defined in terms of B and C. Keep in mind that the example has been constructed so that the only causal relation is from C to B. There are no other causal relationships. Yet, if we replace the event A with another event A' defined as A≡B, read 'A if and only if B', then there can exist a probabilistic correlation between A' and B such that, according to Definition 2.4, C is a common cause of A' and B. So much the worse for the definition, for there is, by hypothesis, no such causal relationship. C is not a common cause of A' and B because C is not a cause of A'.

The argument in the previous paragraph assumes that there is such a probability distribution. We begin by assuming that C causes B such that

P(B|C)=1 and P(B|C̄)=0.

Next, we need to ensure that A is probabilistically independent of B (and therefore probabilistically independent of C as well, since B and C are perfectly correlated). Let's introduce some positive integer n, and put P(A)=1-2/n, P(Ā)=2/n, and P(B)=1/2=P(B̄). To ensure the probabilistic independence of A and B, we set

P(A,B)=P(A)P(B)=1/2-1/n=P(A)P(B̄)=P(A,B̄),

P(Ā,B)=P(Ā)P(B)=1/n=P(Ā)P(B̄)=P(Ā,B̄).

Now, notice that P(A')=P(A,B)+P(Ā,B̄)=1/2. Therefore, P(A')P(B)=1/4. But P(A',B)=P(A,B)=1/2-1/n. Therefore, A' and B are positively correlated if n > 4.

Quantitatively,

P(A',B)-P(A')P(B)=1/4-1/n>0 if n > 4.

For example, if n = 10, the correlation is 1/4-1/10=0.15, which is quite large. Finally, we have to check that A' and B are probabilistically independent given C, or given C̄. This is automatically satisfied due to the perfect correlation between C and B. In particular, since P(B|C)=1,

P(A',B|C)=P(A'|C)=P(A'|C)P(B|C).

Also, since P(B|C̄)=0,

P(A',B|C̄)=0=P(A'|C̄)P(B|C̄).

Therefore, the triple (A', B, C) satisfies all the conditions (5)–(8) (if, for example, n = 10), even though C is not a common cause of A' and B.
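The whole construction can be checked mechanically. The sketch below is one way of doing so, under the assumptions stated in the text: C is necessary and sufficient for B, A is independent of B, P(A)=1-2/n, and P(B)=1/2. The function names are my own and are purely illustrative. The check also covers conditions (7) and (8), which the text above does not verify explicitly.

```python
from fractions import Fraction
from itertools import product


def build_joint(n):
    """Joint distribution over worlds (a, b, c) for the counterexample."""
    p_a = 1 - Fraction(2, n)
    dist = {}
    for a, b in product([True, False], repeat=2):
        c = b  # C is necessary and sufficient for B, so C and B always agree
        dist[(a, b, c)] = (p_a if a else 1 - p_a) * Fraction(1, 2)
    return dist


def prob(dist, event, given=lambda w: True):
    """Conditional probability P(event | given)."""
    den = sum(p for w, p in dist.items() if given(w))
    num = sum(p for w, p in dist.items() if given(w) and event(w))
    return num / den


def reichenbach_conditions(dist, X, Y, Z):
    """Truth values of conditions (5)-(8) for effects X, Y and candidate Z."""
    not_Z = lambda w: not Z(w)
    X_and_Y = lambda w: X(w) and Y(w)
    c5 = prob(dist, X_and_Y, Z) == prob(dist, X, Z) * prob(dist, Y, Z)
    c6 = prob(dist, X_and_Y, not_Z) == prob(dist, X, not_Z) * prob(dist, Y, not_Z)
    c7 = prob(dist, X, Z) > prob(dist, X, not_Z)
    c8 = prob(dist, Y, Z) > prob(dist, Y, not_Z)
    return c5, c6, c7, c8


A_prime = lambda w: w[0] == w[1]  # A' is defined as "A if and only if B"
B = lambda w: w[1]
C = lambda w: w[2]

dist = build_joint(10)
A_prime_and_B = lambda w: A_prime(w) and B(w)
difference = prob(dist, A_prime_and_B) - prob(dist, A_prime) * prob(dist, B)
conditions = reichenbach_conditions(dist, A_prime, B, C)
print(difference, conditions)
```

For n = 10 the difference P(A',B)-P(A')P(B) evaluates to 1/4-1/10, and all four conditions hold, in agreement with the calculation above.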

One might respond by saying that this is a counterexample to the Principle of Common Cause. Indeed, it is exactly one kind of example that Arntzenius (Arntzenius (1993), Arntzenius (2010)) presents as a counterexample to the Principle of Common Cause. However, this is not the response that Hofer-Szabó et al. want to make; they want to defend the Principle of Common Cause. Nor can they respond in this way if they are committed to Definition 2.4, for that definition says that there is a common cause of the correlation.

What should defenders of the Principle of Common Cause (such as this reviewer) say in response to this example if they do not want to accept Definition 2.4? In my opinion, the proper response is that it is a counterexample to one possible formulation of the Principle of Common Cause; it shows that the principle needs to be reformulated. This issue is well known, so let me quote Hitchcock (2012) (pp. 14-15):

[The Principle of Common Cause] may fail if events A and B are not distinct. . . . For example, it may fail if A and B are logically related, or involve spatiotemporal overlap . . . . [Suppose] that I am throwing darts at a target, and that my aim is sufficiently bad that I am equally likely to hit any point on the target. Suppose that a and b are regions of the target that almost completely overlap. Let A be the event of the dart's landing in region a, B the dart's landing in region b. Now, A and B will be correlated, and there may well be no cause that screens them off.

The example that I just used against Definition 2.4 is one in which the events A' and B are not distinct because they are logically related; recall that A' is defined as A≡B. The solution is not to insist that there is a cause where there is none. The solution is to restrict the Principle of Common Cause to apply to correlations between events that are distinct; that is, when events are not logically related and do not involve spatiotemporal overlap.

This kind of defense of the Principle of Common Cause is exactly what Hofer-Szabó et al. hoped to avoid. As soon as such restrictions are imposed on A and B, the Common Cause Principle does not "get a conceptually and technically sharp formulation" (p. 2), as they put it. In particular, it is no longer possible to formulate the Common Cause Principle simply in terms of four probabilistic conditions and prove theorems about it in probability theory.

4. Why the Definition is Also Not a Necessary Condition

The authors present the conditions in Definition 2.4 as a sufficient condition for a common cause (as indicated by their use of the word 'if', rather than 'only if', or 'if and only if'). In the previous section, I argued that the conditions in Definition 2.4 are not sufficient conditions for a common cause. However, if the conditions in Definition 2.4 were necessary conditions for a common cause, at least some of their results might be philosophically useful (the ones that rely on the 'only if' part of the definition). The example in this section is designed to show that the conditions in Definition 2.4 are not necessary conditions for a common cause either.

We need an example in which there is a common causal structure, but the conditions in Definition 2.4 do not all hold. It is sufficient to construct an example in which the two effects are uncorrelated, for the conditions in Definition 2.4 entail that the two effects must be correlated, so at least one of those conditions must fail.

Suppose we toss a fair coin. If it lands heads, then two lights will flash the same color (either green-green or red-red). On the other hand, if the coin lands tails, then the two lights will flash different colors (either green-red or red-green). Whether the light on the left will flash green or red will be decided by the toss of a second (independent) coin. If the second coin lands heads, then the left light will flash green; if it lands tails, it will flash red. So, the two coin tosses together determine the color of both lights. The light flashes are the effects of a common cause, namely the outcomes of the coin tosses. Since the coin tosses are fair and independent, each possible outcome, HH, HT, TH, and TT, is equally probable, so each has a probability of ¼. This implies that the light flashes are probabilistically independent, which proves that no matter how one defines an event C, at least one of the conditions in Definition 2.4 must fail. Therefore, Definition 2.4 provides neither sufficient nor necessary conditions for a common cause.
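The example is small enough to check exhaustively. The sketch below takes the two effects to be "the left light flashes green" and "the right light flashes green" (my reading of the example, adopted for illustration), confirms that they are independent, and then tries every event C that can be defined in terms of the two coin tosses. It covers only events on this four-outcome space; the general claim for arbitrary C rests on the fact that conditions (5)–(8) entail a positive correlation.

```python
from fractions import Fraction
from itertools import combinations, product

# Outcomes are (first coin, second coin); both coins are fair and independent.
outcomes = list(product("HT", repeat=2))
P = {w: Fraction(1, 4) for w in outcomes}


def left_green(w):
    """The second coin decides the left light: heads means green."""
    return w[1] == "H"


def right_green(w):
    """First coin heads: same colour as the left light; tails: the other colour."""
    return left_green(w) == (w[0] == "H")


A = frozenset(w for w in outcomes if left_green(w))
B = frozenset(w for w in outcomes if right_green(w))


def pr(event):
    return sum(P[w] for w in event)


def cond(event, given):
    return pr(event & given) / pr(given)


def satisfies_5_to_8(A, B, C):
    """True if the candidate event C meets all of conditions (5)-(8)."""
    not_C = frozenset(outcomes) - C
    return (
        cond(A & B, C) == cond(A, C) * cond(B, C)
        and cond(A & B, not_C) == cond(A, not_C) * cond(B, not_C)
        and cond(A, C) > cond(A, not_C)
        and cond(B, C) > cond(B, not_C)
    )


# Every event C with 0 < P(C) < 1, i.e. every non-empty proper subset.
candidates = [
    frozenset(c) for k in range(1, 4) for c in combinations(outcomes, k)
]
independent = pr(A & B) == pr(A) * pr(B)
witnesses = [C for C in candidates if satisfies_5_to_8(A, B, C)]
print(independent, len(candidates), witnesses)
```

The two light flashes come out independent, and none of the 14 candidate events satisfies all four conditions, even though the coin tosses are by construction the common cause of both flashes.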

5. Concluding Remark

This book is representative of a large body of literature in philosophy of physics that applies Reichenbach's ideas to issues in the foundations of physics, especially to understanding Bell's argument for non-locality in a quantum world (beginning with van Fraassen (1982a) and van Fraassen (1982b)). Philosophers of physics have mostly focused on ideas that can be traced back to Reichenbach (1956), and have tended to ignore another important part of the literature on probabilistic causation. I have in mind the approach to probabilistic causation based on path analysis, which originated with Wright (1921), and was subsequently developed and generalized by Pearl (2000), as well as Spirtes, Glymour and Scheines (2000). This approach is founded on what is now called the Causal Markov Condition.

The key to understanding why the authors' definition of common cause (Definition 2.4) is mistaken lies in clearly understanding what the Causal Markov Condition does, and does not, say. Pearl (2000) introduced a notion commonly known as d-separation. It's not important exactly how this notion is defined (but see Zhang (2013) for an excellent introduction). The important point is only that d-separation is a property of a causal diagram; it has nothing whatsoever to do with probability. The Causal Markov Condition then introduces a connection from d-separation to probabilistic independence. That is, it says that if a d-separation relation holds, then a corresponding probabilistic independence relation holds. It's important to understand the direction of the entailment. It takes you from a causal fact to a fact about probabilistic independence, and not the other way around. The condition that takes you in the other direction, from a probabilistic independence relation to a d-separation relation, is called the Causal Faithfulness Condition. The truth of the Causal Markov Condition is widely upheld, but everyone agrees that the Faithfulness Condition is false.

Note that the Causal Markov Condition can be equivalently expressed in terms of its contrapositive. In that form, it says that if certain probabilistic dependence relations hold, then certain causal facts must hold. The Principle of Common Cause is exactly of this form, which makes it possible for the Principle of Common Cause to follow from the Causal Markov Condition (see, for example, Hausman and Woodward (1999)).

What consequences does this have in the present discussion? When we look at the four conditions in Definition 2.4, we see that two of them are probabilistic independence conditions, while two of them are probabilistic dependence conditions. Thus, in one direction, the probabilistic independence relations may follow from causal facts, but not the probabilistic dependence relations. In the other direction, the probabilistic dependences can entail causal facts, but the probabilistic independences do not. Therefore, Definition 2.4 cannot be a necessary and sufficient condition for common cause. To think otherwise would be to think that the Causal Faithfulness Condition is true. Unsurprisingly, the example in the previous section is exactly an example in which Faithfulness fails.

That explains why the many theorems stated and proven in Hofer-Szabó et al. are difficult to interpret philosophically. And it explains why the results, as they are described in the book, should not be taken at face value.

REFERENCES

Arntzenius, F. (1993). The Common Cause Principle. In PSA 1992, 227-237. East Lansing, Michigan: Philosophy of Science Association.

--- (2010). Reichenbach's Common Cause Principle. Stanford Encyclopedia of Philosophy, 1-27.

Hausman, D. M. & J. Woodward (1999). Independence, Invariance and the Causal Markov Condition. The British Journal for the Philosophy of Science, 50, 521-583.

Hitchcock, C. (2012). Probabilistic Causation. Stanford Encyclopedia of Philosophy.

Pearl, J. (2000). Causality: Models, Reasoning, and Inference. Cambridge University Press.

Reichenbach, H. (1956). The Direction of Time. Berkeley: University of California Press.

Spirtes, P., C. Glymour & R. Scheines (2000). Causation, Prediction, and Search. Cambridge, MA: MIT Press.

van Fraassen, B. C. (1982a). The Charybdis of Realism: Epistemological Implications of Bell's Inequality. Synthese, 52, 25-38.

--- (1982b). Rational Belief and the Common Cause. In What? Where? When? Why?: Essays on Induction, Space and Time, Explanation, ed. R. McLaughlin, 193-227. D. Reidel.

Wright, S. (1921). Correlation and Causation. Journal of Agricultural Research, 20, 557-585.

Zhang, J. (2013). A Comparison of Three Occam's Razors for Markovian Causal Models. The British Journal for the Philosophy of Science, 64, 423-448.